Charting a Course: Setting Terakeet's Corporate AI Policy

As Generative AI solutions have proliferated, companies are struggling to establish clear policies for where and when it’s appropriate to use these technologies. While Generative AI can be a powerful tool to automate routine tasks, improve scalability of processes, and create new products and services, its use is not without risks. We’ve all seen articles detailing the negative outcomes of over-reliance on AI, from lawyers being fined for presenting hallucinated legal cases to Google cracking down on AI-generated clickbait.

A responsible business strategy includes a clear policy to ensure AI is used ethically and responsibly. At Terakeet, I recently led our team through a process to define our corporate AI policy. In this post, I’ll share the process we used and the benefits we’ve seen from having a clear policy in place.

Understanding the Need

As the Director of Data Science, I was receiving a lot of inquiries from teams throughout the organization about AI use, messaging, and strategy. I realized that we needed a policy to guide our work and set expectations. There are several benefits to establishing a corporate AI policy:

- Clear messaging to current and potential customers shows that we’re knowledgeable about data science and prepared to use AI responsibly and appropriately.

- An internal statement builds trust in senior leadership and sets expectations around development.

- A shared understanding of our data science strategy helps our teams suggest and prioritize projects.

Setting the Stage

The Spectrum of Data Science

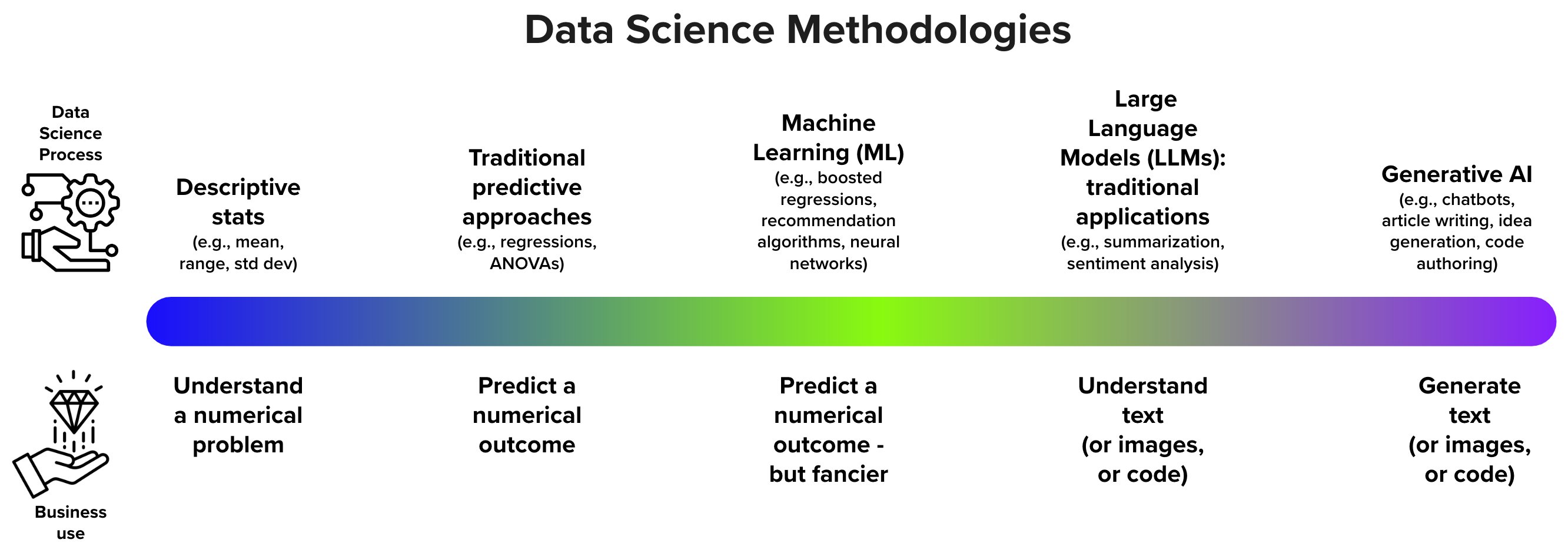

To guide the conversation, I started by positioning Generative AI along the spectrum of data science approaches:

Data Science Spectrum

Terakeet’s data science applications span the full spectrum of available methodologies. For example, descriptive statistics are frequently used to provide analytics for client reporting or basic insights to guide teams’ work. Machine Learning algorithms are the backbone of several of our core applications, such as Growth Projections and Keyword Topic Clustering. We use traditional applications of Large Language Models such as classification and summarization to power the Total Addressable Market semantic similarity scoring, the URL Intent Classifier, and a range of other models.

Generative AI

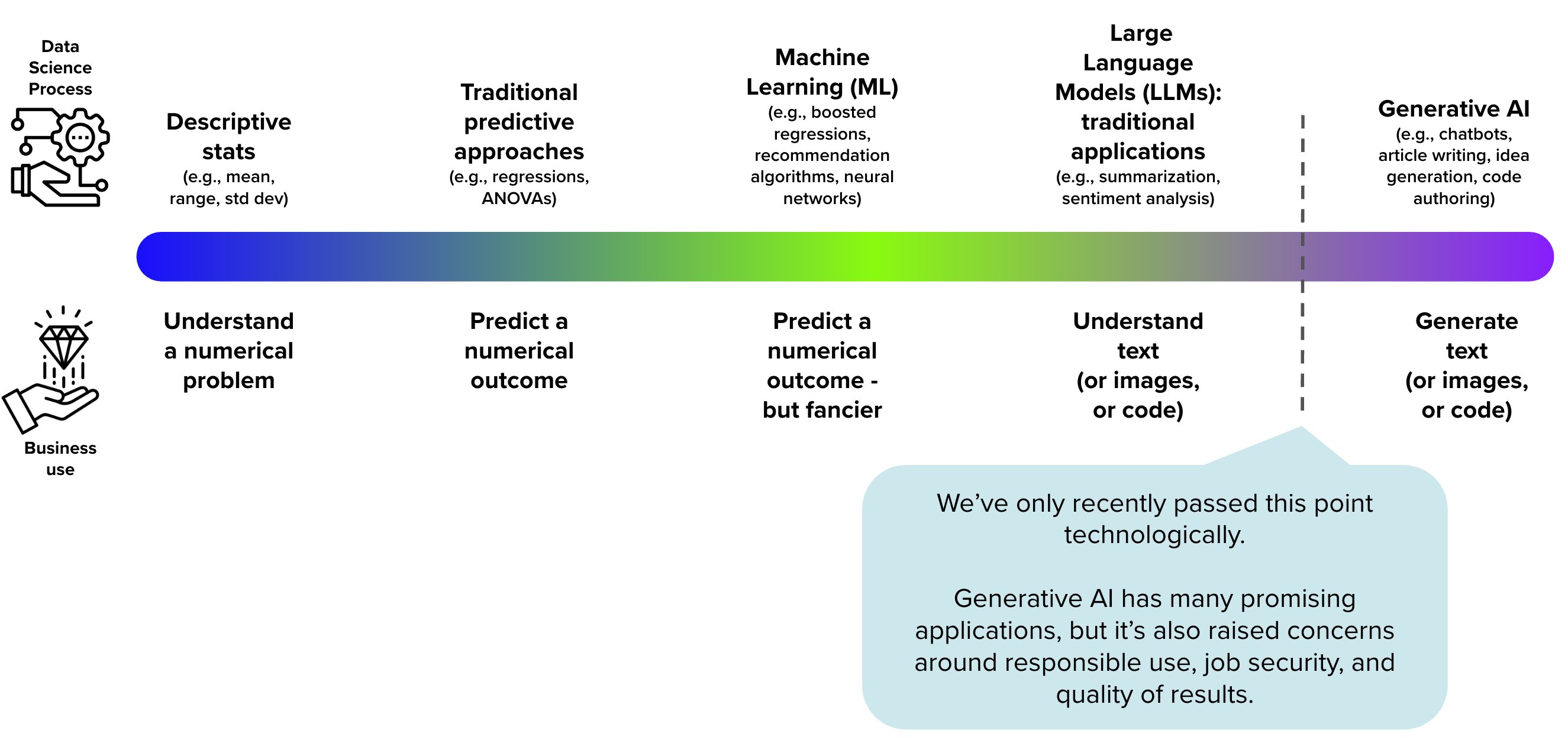

Generative AI is a relatively recent addition to the Data Science toolkit. While Generative AI models have been under development for decades, they have only recently reached a level of performance supporting complex applications.

Since the release of ChatGPT in late 2022, Generative AI has been applied to many use cases, but it has also raised concerns around responsible use, job security, and the quality and accuracy of results.

Adoption Scenarios for Generative AI

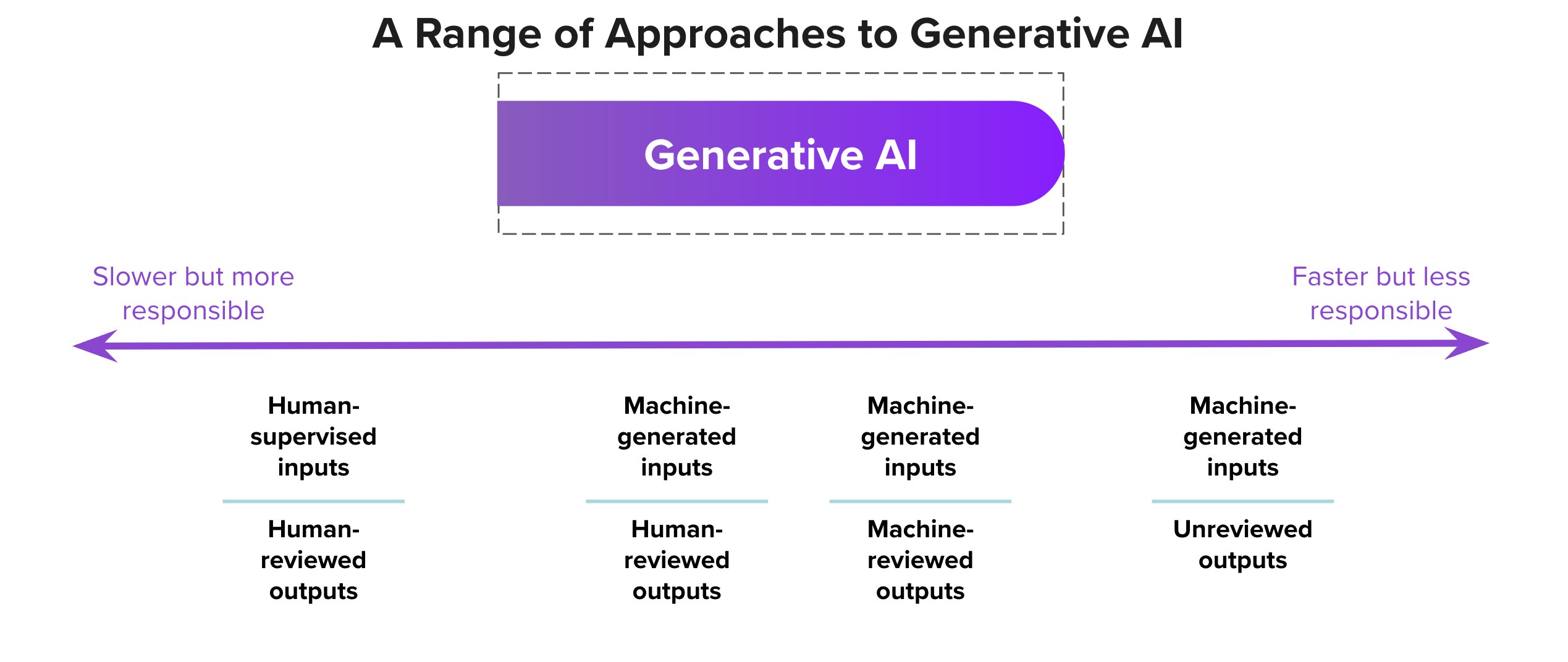

Zooming in on the Generative AI space in the Data Science spectrum, we can see there is a range of adoption scenarios.

Generative AI Adoption Approaches

More Conservative: Slower, but more Responsible

On the more conservative side of the spectrum, humans are responsible for both constructing the inputs to Generative AI models and reviewing the outputs before dissemination. An example would be:

- Content specialists identify topics for content drafts,

- Generative AI creates an outline to guide a human author, and then

- A human author writes an article for publication, possibly with AI assistance for grammar and tone.

This type of approach is slower, as there’s more time dedicated to human review, but it’s also more responsible: the human reviewer can catch errors or biases in the AI-generated content.

More Aggressive: Faster, but Riskier

On the opposite side of the spectrum, we have a fully automated system where the inputs are generated by AI and the outputs are directly employed without human review. An example of this would be:

- A content recommendation algorithm suggests a topic,

- Generative AI is used to create drafts, and then

- Drafts are directly published without review.

Such a system would greatly reduce time to deliverables, but it also introduces risks: Generative AI systems are prone to hallucinations, and they may create content that is factually incorrect, needlessly complex in its syntax, or even biased against certain groups.
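To make the contrast concrete, here is a minimal Python sketch of the one difference that matters most for risk: whether a person checks the output before it is published. It is an illustration only, not a description of Terakeet's tooling. The names `Draft`, `run_pipeline`, and `fake_generate` are hypothetical, and `fake_generate` is a stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Draft:
    topic: str
    text: str
    approved: bool = False


def run_pipeline(
    topic: str,
    generate: Callable[[str], str],
    review: Optional[Callable[[Draft], bool]] = None,
) -> Optional[Draft]:
    """Return a publishable draft, or None if the reviewer rejects it."""
    draft = Draft(topic=topic, text=generate(topic))
    if review is None:
        # More aggressive scenario: nothing checks the output before release.
        draft.approved = True
        return draft
    # More conservative scenario: a person decides whether the draft goes out.
    draft.approved = review(draft)
    return draft if draft.approved else None


def fake_generate(topic: str) -> str:
    # Hypothetical stand-in for a Generative AI call.
    return f"Draft article about {topic}"
```

Calling `run_pipeline(topic, fake_generate)` with no `review` argument publishes whatever the generator returns, while passing a `review` callable means a rejected draft never leaves the pipeline. The speed and risk trade-off described above comes down to that one optional step.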

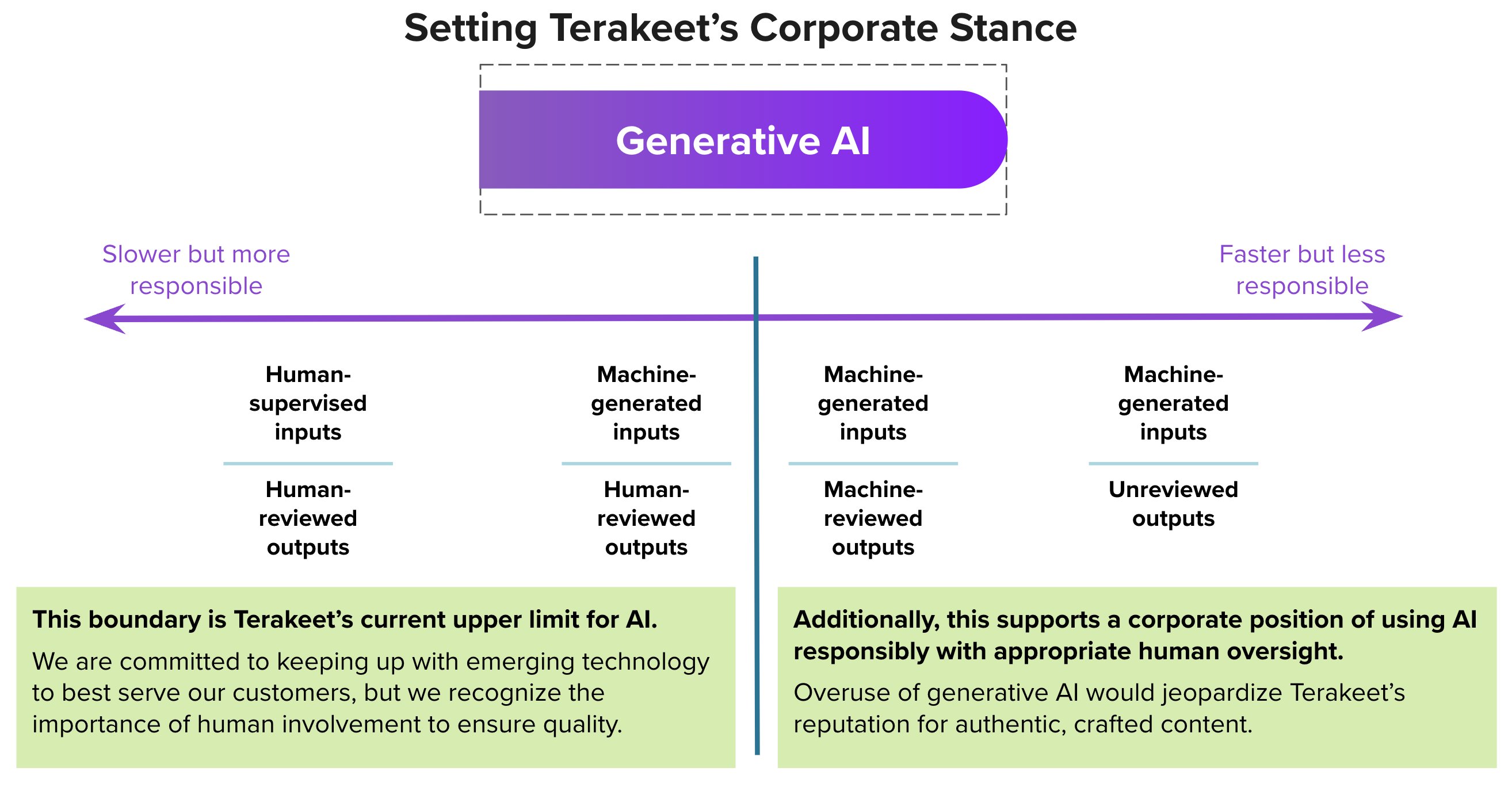

Setting Our Policy

This range of approaches was the starting point for our policy discussion. Our senior leadership team debated the merits of policy stances along the spectrum; we wanted to ensure that our teams have the support they need to deliver high-quality work quickly and efficiently, and also that our company continues to be a leader in authentic, crafted brand content.

Generative AI Policy

Once we had settled on our stance, I communicated it to our internal teams at an all-hands meeting. My presentation followed the main points of this article: the benefits of a policy, the spectrum of available data science approaches and how Generative AI has widened the range, our current and potential future business uses for these technologies, and the risks and benefits of different adoption scenarios.

Continuing the Conversation

Our commitment is to communicate proactively with our internal teams and our customers about our AI use.

- As technology and the market evolve, we will communicate to employees any changes to the boundaries of generative AI usage.

- We will maintain transparency around our use of generative AI in our technology through ongoing communication around our software roadmap as well as regular updates about AI in particular.

We also established an AI Task Force with members from senior roles on the Data Science, Product Marketing, Delivery, Customer Success, and Brand Identity teams. This group meets monthly to discuss planned AI projects, incoming needs from customers, and any changes in the market that might impact our AI stance.

Additionally, we collaborated with our legal team to release a governance document stipulating that no confidential information relating to Terakeet or our clients may be input into any Generative AI tool.
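A rule like this is ultimately enforced by people, but a simple pre-submission check can serve as a reminder. The sketch below is an assumption for illustration, not part of the governance document: the function `find_confidential_terms` and the example terms are hypothetical, and a keyword match will miss anything that is not on the list.

```python
import re
from typing import Iterable, List


def find_confidential_terms(prompt: str, blocked_terms: Iterable[str]) -> List[str]:
    """Return the blocked terms found in the prompt (whole words, case-insensitive)."""
    hits = []
    for term in blocked_terms:
        # re.escape keeps punctuation in a term from being read as regex syntax.
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            hits.append(term)
    return hits


# Hypothetical client and project names, used only for this example.
BLOCKED = ["Acme Corp", "Project Falcon"]
flagged = find_confidential_terms("Summarize the acme corp Q3 plan", BLOCKED)
```

If `flagged` is non-empty, the prompt would be held back for the author to revise before anything is sent to a Generative AI tool.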

Our policy is a living document, and we expect it to evolve alongside the market and technology. Our AI Task Force will stay abreast of internal and external needs to ensure our policy remains relevant and applicable as we continue to grow and innovate.